Hello Everyone! It’s me again, trying to maintain a weekly post cadence!

Today I’m going to talk about some roadblocks I hit during a VCF 4.2 deployment in a real customer environment. Hopefully this will prevent these issues from happening to you, or help you solve them quickly if they do arise!

Password Policy for Cloud Builder

In VCF 4.2, several changes were made to password strength requirements. Using 8-character passwords seems to be hit or miss: with a password like “VMw@r3!!” you could get a valid deployment and then an invalid one immediately afterwards when deploying another Cloud Builder. I haven’t been able to fully grasp the cause of this behaviour.

In addition, VMware is now a dictionary word, so it won’t be allowed. That means “VMware1!” and “VMware1!VMware1!” will also fail.

The password that I’ve been using successfully for the initial deployment is “VMw@r3!!VMw@r3!!”. It has worked for me every time, so you can go ahead and use that one.
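If you want to sanity-check a candidate password before deploying Cloud Builder, here is a minimal sketch of the two pitfalls described above. The function name check_cb_password is my own, and the checks only mirror what I observed in this deployment. They are not VMware’s full password policy.

```shell
# Pre-check a candidate Cloud Builder password against the two pitfalls above.
# Assumption: these rules only reflect this post's observations,
# not the complete VCF 4.2 password policy.
check_cb_password() {
    pw=$1
    # "VMware" is treated as a dictionary word (matched case-insensitively here).
    if printf '%s' "$pw" | grep -qi 'vmware'; then
        echo "reject: contains the dictionary word VMware"
        return 1
    fi
    # 8-character passwords were hit or miss, so flag anything that short.
    if [ "${#pw}" -le 8 ]; then
        echo "warn: passwords of 8 characters or fewer were unreliable"
        return 2
    fi
    echo "ok"
}
```

For example, check_cb_password 'VMware1!VMware1!' is rejected, while check_cb_password 'VMw@r3!!VMw@r3!!' prints ok.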

Hostnames in uppercase

This one is really, really strange. If the hostnames of your ESXi hosts are in uppercase, you will get a ‘Failed to connect to lowercase_hostname’ error for all of your hosts when running the validation. The validation will then stop without querying any of the host configuration.

I spent some time trying to figure this out. At first I thought it was the DNS records, but then in a different environment, 3 of the 4 hosts had their hostnames in uppercase and one in lowercase, and the lowercase one was the only one connecting. That made me test the change on another host, and suddenly the newly lowercased host was connecting too!

To clarify, ESXI1.VSPHERE.LOCAL will fail and esxi1.vsphere.local will work. Make sure your hostnames are in lowercase.
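A quick way to catch this before running the validation is to check your list of host FQDNs for uppercase characters. This is only a sketch, and find_uppercase_hostnames is a name I made up for illustration.

```shell
# Print every FQDN that contains uppercase characters, together with the
# lowercase form it should have. Prints nothing if all names are fine.
find_uppercase_hostnames() {
    for fqdn in "$@"; do
        lower=$(printf '%s' "$fqdn" | tr '[:upper:]' '[:lower:]')
        if [ "$fqdn" != "$lower" ]; then
            echo "$fqdn -> $lower"
        fi
    done
}
```

Calling find_uppercase_hostnames ESXI1.VSPHERE.LOCAL esxi2.vsphere.local flags only the first host. On the host itself, the name can typically be changed with esxcli system hostname set --fqdn=esxi1.vsphere.local, but verify that against your ESXi version before relying on it.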

Heterogeneous / Unbalanced disk configuration across hosts

This one is really interesting. Let’s say you’re doing an all-flash VCF deployment and you have 20 disks per host. The best way to configure it would be 4 disk groups of 1 cache + 4 capacity disks, so that all 20 disks are used.

Since you can have at most 5 disk groups of 1 cache + 7 capacity disks, 40 is the maximum number of disks you can have per host.

However, make sure that you’re following these two rules for your deployment:

- Make sure that the number of disks is a multiple of a homogeneous disk group configuration, so that all your disks can be used and all the disk groups have the same number of disks. For example, if you have 22 disks, there is no way to use all of them while keeping every disk group the same size. You can do 3 x (1+6) and one disk won’t be used, or 4 x (1+4) and two won’t be used.

- Make sure that all your hosts have the same number of disks. You can check this before installing. In my scenario, validation was passing, but it was setting the cluster as hybrid instead of all flash.

After checking that all devices were SSDs and were marked as SSD, I was really confused. Then I checked again and found that two of the hosts had 2 more disks than the rest. After fixing that, the validation passed and marked the cluster as all flash.
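The arithmetic behind the first rule is easy to script. The sketch below lists every homogeneous layout that uses all the disks on a host, using the limits mentioned above (at most 5 disk groups, each with 1 cache disk and up to 7 capacity disks). The function name full_layouts is just something I picked for this example.

```shell
# List homogeneous disk group layouts that use every disk on a host.
# Limits taken from the text: max 5 disk groups, 1 cache + up to 7 capacity each.
full_layouts() {
    disks=$1
    dg=1
    while [ "$dg" -le 5 ]; do
        capacity=1
        while [ "$capacity" -le 7 ]; do
            # Each disk group consumes 1 cache disk plus its capacity disks.
            if [ $(( dg * (1 + capacity) )) -eq "$disks" ]; then
                echo "$dg x (1+$capacity)"
            fi
            capacity=$(( capacity + 1 ))
        done
        dg=$(( dg + 1 ))
    done
}
```

Running full_layouts 20 prints the 4 x (1+4) layout from the example (and also 5 x (1+3)), while full_layouts 22 prints nothing, which confirms that 22 disks cannot be fully used with equal-sized disk groups.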

EVC Mode

This one almost made me reinstall the whole cluster…

BE REALLY SURE that you’re selecting the correct EVC mode for your CPU family if you’re selecting an EVC mode in the Cloud Builder spreadsheet.

If you select the wrong EVC mode, the Cloud Builder deployment will fail, and you won’t be able to continue from the GUI at all. The only way around it is via the API. The alternative is wiping the cluster and starting from scratch!

I’m going to show you how to fix this issue, but the same method applies whenever you need to edit the configuration and then re-attempt a deployment.

First of all, you need to get your SDDC Deployment ID. You can get it with the API call below. I will be using curl for this example, but you can also use something like Invoke-RestMethod in PowerShell or even a GUI-based REST client such as Postman.

Get your SDDC Deployment ID

curl 'https://cloud_builder_fqdn/v1/sddcs/' -i -u 'admin:your_password' -X GET \
-H 'Content-Type: application/json' \
-H 'Accept: application/json' \
-k

You can export the output to a file or to a text viewing tool such as less, and then search for the sddcId value.
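If you saved the output to a file, you can also pull the value out with grep instead of searching by eye. This is a rough sketch: I am assuming the field shows up in the JSON as "sddcId": "value", and both the function name extract_sddc_ids and the file path in the usage line are hypothetical.

```shell
# Print every sddcId value found in a saved API response.
# Assumption: the field appears in the JSON as "sddcId": "<value>".
extract_sddc_ids() {
    grep -o '"sddcId"[[:space:]]*:[[:space:]]*"[^"]*"' "$1" |
        sed 's/.*"\([^"]*\)"$/\1/'
}
```

For example, after redirecting the curl output to /tmp/sddcs.txt, extract_sddc_ids /tmp/sddcs.txt prints one ID per line. If nothing is printed, fall back to searching the output manually.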

Editing the JSON File

Once you have the sddcId, you need to edit the JSON file that CB generated from the spreadsheet so you can then use it in the API call. I recommend that you copy the file and edit the copy. The file is located at /opt/vmware/sddc-support/cloud_admin_tools/resources/vcf-public-ems/

#COPY THE FILE

cp /opt/vmware/sddc-support/cloud_admin_tools/Resources/vcf-public-ems/vcf-public-ems.json /tmp/newjson.json

#REPLACE STRING ON FILE

sed -i "s/cascadelake/haswell/g" /tmp/newjson.json

You can also edit the file using vi. In this case I used sed because I knew the string would only appear once in the file and it was faster.
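If you are not sure the string appears only once, it is worth checking before letting sed loose on the file. Here is a minimal sketch of that check. replace_once is a helper name I made up, and it assumes the search and replacement strings are plain words without regex or slash characters.

```shell
# Replace OLD with NEW in FILE, but only if OLD occurs exactly once.
# Assumption: OLD and NEW are plain strings (no regex metacharacters or "/").
replace_once() {
    file=$1
    old=$2
    new=$3
    # grep -o prints one line per match, so wc -l counts occurrences.
    count=$(grep -o "$old" "$file" | wc -l | tr -d ' ')
    if [ "$count" -ne 1 ]; then
        echo "expected 1 occurrence of '$old', found $count" >&2
        return 1
    fi
    sed -i "s/$old/$new/" "$file"
}
```

With the copy from the previous step, that would be replace_once /tmp/newjson.json cascadelake haswell. If the string shows up more than once, the file is left untouched and you can fall back to editing it by hand in vi.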

Restarting the deployment

Now that you have the sddcId and you’ve edited the JSON file, it is time for you to restart the process using another API call

curl 'https://cloud_builder_fqdn/v1/sddcs/your_sddc_id_from_previous_step' -i -u 'admin:your_password' -X PATCH -H 'Content-Type: application/json' -H 'Accept: application/json' -d "@/tmp/newjson.json" -k

Make sure to add the @ before the location of the file when using curl.

Once you run this, you should get something like:

HTTP/1.1 100 Continue
HTTP/1.1 200
Server: nginx
Date: Wed, 07 Apr 2021 20:37:08 GMT

And if you log in to the Cloud Builder web interface, your deployment should be running again! Phew, you saved yourself from reinstalling and preparing 4 nodes! Go grab a beer while the deployment continues 😀

Driver Issue when installing NSX-T VIBs

I ran into this issue after waiting for multiple hours for the NSX-T Host Preparation to finish, and seeing all the hosts on the NSX-T tab being marked as failed.

When checking the debug logs for Cloud Builder, I saw errors like:

2021-04-07T23:06:44.700+0000 [bringup,196c7022580bfc32,5a84] DEBUG [c.v.v.c.f.p.n.p.a.ConfigureNsxtTransportNodeAction,bringup-exec-7] TransportNode esxi1.vsphere.local DeploymentState state is {"details":[{"failureCode":26080,"failureMessage":"Failed to install software on host. Failed to install software on host. esxi1.vsphere.local : java.rmi.RemoteException: [DependencyError] VIB QLC_bootbank_qedi_2.19.9.0-1OEM.700.1.0.15843807 requires qedentv_ver \u003d X.40.17.0, but the requirement cannot be satisfied within the ImageProfile. VIB QLC_bootbank_qedf_2.2.8.0-1OEM.700.1.0.15843807 requires qedentv_ver \u003d X.40.17.0, but the requirement cannot be satisfied within the ImageProfile. Please refer to the log file for more details.","state":"failed","subSystemId":"eeaefa1e-c5a2-4a8a-9623-994b94a803a9","__dynamicStructureFields":{"fields":{},"name":"struct"}}],"state":"failed","__dynamicStructureFields":{"fields":{},"name":"struct"}}

This is related to QLogic drivers that are included in the HP custom image that was being used in this deployment (and was patched to 7.0u1d, which is the prerequisite for VCF 4.2).

Indeed, these drivers were installed

esxcli software vib list | grep qed
qedf 2.2.8.0-1OEM.700.1.0.15843807 QLC VMwareCertified 2021-03-03
qedi 2.19.9.0-1OEM.700.1.0.15843807 QLC VMwareCertified 2021-03-03
qedentv 3.40.3.0-12vmw.701.0.0.16850804 VMW VMwareCertified 2021-03-04
qedrntv 3.40.4.0-12vmw.701.0.0.16850804 VMW VMwareCertified 2021-03-04

None of these drivers were in use, and none of the hosts were using QLogic hardware, so the drivers could be removed without issues. However, it is best to unconfigure the hosts from NSX-T first, since that also prompts for a reboot.
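To see at a glance which qed VIBs a host carries, you can reduce the VIB list to just the names. This is a small sketch, with list_qed_vibs being a helper name I chose. It reads the esxcli software vib list output from standard input.

```shell
# Read "esxcli software vib list" output on stdin and print the name
# (first column) of every VIB whose name starts with "qed".
list_qed_vibs() {
    awk '$1 ~ /^qed/ { print $1 }'
}
```

For example, from a workstation with SSH access: ssh root@esxi1.vsphere.local esxcli software vib list | list_qed_vibs. Before removing anything, confirm on your own hosts that no QLogic hardware is actually present.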

Go to the Transport Node tab in NSX-T, select the cluster, and click on “Unprepare”. This will likely fail and prompt you to run a force cleanup. The force cleanup will work, and the hosts will disappear from the tab.

In my scenario, none of the NSX-T VIBs were installed, so no NSX-T VIB cleanup was necessary.

Now it is time to delete the drivers from the hosts and reboot them. Run this on the hosts one at a time (since you already have the vCenter, vCLS, and NSX Manager VMs running, you can’t just blindly power off all your hosts):

esxcli software vib remove --vibname=qedentv --force
esxcli software vib remove --vibname=qedrntv --force
esxcli software vib remove --vibname=qedf --force
esxcli software vib remove --vibname=qedi --force
esxcli system maintenanceMode set --enable true
esxcli system shutdown reboot --reason "Drivers"

Edge TEP to ESXi TEP validation when using Static IP Pool

VCF 4.2 removes the need for a DHCP server on the ESXi TEP network (as long as you’re not using a stretched cluster), which is a lifesaver for many, since setting up the DHCP server was usually a stopper for customers (the other one being BGP).

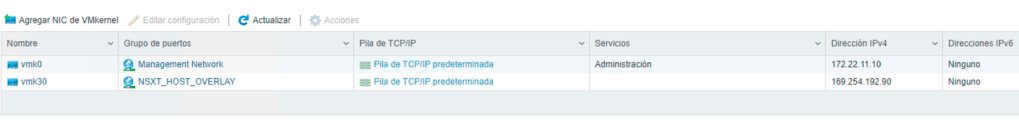

However, the validation still looks for a DHCP server, even if you configured a Static IP Pool in the spreadsheet. Since there isn’t one, the host gets a 169.254.x.x IP and the validation fails. For example:

VM Kernel ping from IP '172.22.17.2' ('NSXT_EDGE_TEP') from host 'esxi1.vsphere.local' to IP '169.254.31.119' ('NSXT_HOST_OVERLAY') on host 'esxi2.vsphere.local' failed

Luckily, this is just a validation bug. It has been reported internally and will likely be fixed in an upcoming VCF release. The issue does not appear during the actual deployment, and the TEP addresses will be set up correctly using the static IP pool.
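To tell this false failure apart from a real connectivity problem, check whether the target IP in the error is a 169.254.x.x address. A tiny sketch of that check follows. The function name is_link_local is my own.

```shell
# Succeed if the given IPv4 address is in the 169.254.0.0/16 range,
# which is what a host ends up with when no DHCP server answers.
is_link_local() {
    case "$1" in
        169.254.*) return 0 ;;
        *) return 1 ;;
    esac
}
```

In the error above, is_link_local 169.254.31.119 succeeds for the NSXT_HOST_OVERLAY address, which points to this validation bug. If the failing target is a regular address from your TEP range, you are looking at a different problem.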

BGP Route Distribution Failure

If BGP neighboring is not configured correctly on your upstream routers, you will see the task “Verify BGP Route Distribution” fail:

2021-04-08T05:09:54.729+0000 [bringup,42ba3b72e2ee4185,395f] ERROR [c.v.v.c.f.p.n.p.a.VerifyBgpRouteDistributionNsxApiAction,pool-3-thread-13] FAILED_TO_VALIDATE_BGP_ROUTE_DISTRIBUTION
com.vmware.evo.sddc.orchestrator.exceptions.OrchTaskException: Failed to validate the BGP Route Distribution result for edge node with ID 123b3404-bab6-4013-a9f7-eba3b91b4faf

This means that the BGP configuration on the upstream routers is incorrect. Usually, there is a BGP neighbor missing. The easiest way to figure out what’s missing is to check the BGP status on the Edge Nodes.

In my case, the upstream switches only had one neighbor configured per uplink VLAN, so node 1 showed:

BGP neighbor is 172.22.15.1, remote AS 65211, local AS 65210, external link
BGP version 4, remote router ID 172.22.15.1, local router ID 172.22.16.2
BGP state = Established, up for 09:09:51

And node 2 showed:

BGP neighbor is 172.22.15.1, remote AS 65211, local AS 65210, external link
BGP version 4, remote router ID 0.0.0.0, local router ID 172.22.15.3
BGP state = Connect

You can see that the BGP session for node 2 is not established. After configuring the neighbor correctly on the upstream routers, the issue was resolved!
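If you have several neighbors to go through, you can filter the neighbor output for sessions that are not established. This sketch assumes the output looks like the snippets above (a “BGP neighbor is” line followed by a “BGP state =” line), and bgp_not_established is a helper name I made up.

```shell
# Read BGP neighbor output on stdin and print "<neighbor> <state>" for
# every neighbor whose state is not Established.
# Assumption: the output follows the format shown in this post.
bgp_not_established() {
    awk '
        /BGP neighbor is/ { neighbor = $4; sub(/,$/, "", neighbor) }
        /BGP state =/ {
            state = $4
            sub(/,$/, "", state)
            if (state != "Established") print neighbor, state
        }
    '
}
```

Feeding it the node 2 output above prints 172.22.15.1 Connect, while the node 1 output prints nothing.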

Conclusion

Deploying VCF 4.2 in this environment has been a rollercoaster, but luckily all the issues could be solved.

I hope this helps you either avoid these issues (by pre-emptively checking and fixing what could go wrong) or, if they do happen to you, fix them as quickly as possible.

Stay tuned for more VCF 4.2 adventures. Next time: workload domains!

Lucho this was a great post. Thank you for sharing this good information.

Thanks Stalin!

Excellent post!!!

Thank you very much!